
Understanding the Importance of Algorithmic Fairness

17 February 2022

Frank Schmid


The discourse around algorithmic fairness has drawn increasing attention across the insurance industry. As machine learning has become more common in applications ranging from marketing and underwriting to claims management, regulators and consumer rights organisations have raised questions about the ethical risks posed by the technology.

A catalyst of this social discourse was an article entitled “Machine Bias”, published by the investigative news organisation ProPublica.[1] The article focused its criticism on a case management and decision support tool used in the U.S. judicial system to assess a defendant's risk of recidivism: Correctional Offender Management Profiling for Alternative Sanctions, or COMPAS.

A conclusion of the academic research surrounding this debate is that there are competing definitions of fairness, and that these definitions may be incompatible with one another.[2] The concepts of fairness at the centre of the discussion are calibration (also known as predictive parity) and classification parity (also known as error rate balance). A further concept, anti-classification, requires that sensitive (or protected) attributes not be used explicitly in decision-making.[3]

A stylized example, designed for educational purposes, illustrates the intrinsic incompatibility of predictive parity and error rate balance. The example uses the Adult dataset, which figures prominently in machine learning research, with gender as the sensitive attribute. It shows that a classification model that satisfies predictive parity across two groups cannot also satisfy error rate balance if the baseline prevalence differs between the groups.[4]

The publicly available dataset comprises 48,842 anonymized records of income and personal information (such as age, gender and years of education) extracted from the 1994 U.S. Census database. The prediction task is to determine whether a person earns more than $50,000 a year.[5]

In the dataset, 24 percent of individuals are high earners. This baseline rate is higher for males (30 percent) than for females (11 percent). The dataset has been studied for its gender imbalance: females make up 33 percent of the entire dataset but only 15 percent of high earners.
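These group-level figures hang together: the overall rate is a weighted average of the two group rates, and the female share of high earners follows from Bayes' rule. The minimal check below uses only the rounded percentages quoted above; the helper names are hypothetical and the code is not part of the original analysis.

```python
def overall_prevalence(share_female: float, rate_female: float, rate_male: float) -> float:
    """Overall share of high earners as a mixture of the two group rates."""
    return share_female * rate_female + (1 - share_female) * rate_male


def female_share_of_high_earners(share_female: float, rate_female: float, rate_male: float) -> float:
    """Share of high earners who are female, via Bayes' rule."""
    total = overall_prevalence(share_female, rate_female, rate_male)
    return share_female * rate_female / total


# Rounded figures quoted in the text: 33% of records are female,
# 11% of females and 30% of males are high earners.
share_f, rate_f, rate_m = 0.33, 0.11, 0.30
print(f"{overall_prevalence(share_f, rate_f, rate_m):.3f}")            # 0.237
print(f"{female_share_of_high_earners(share_f, rate_f, rate_m):.3f}")  # 0.153
```

Both results round to the 24 and 15 percent reported for the dataset.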

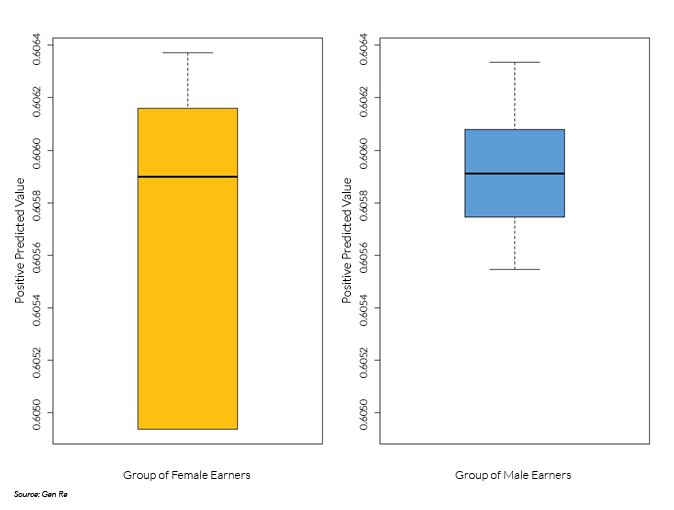

The algorithm[6] satisfies predictive parity at a chosen threshold of predicted probability of being a high earner if, among individuals predicted to be high earners, the empirical probability of actually being a high earner is independent of group membership, where group membership is defined by the sensitive attribute.[7] In plain English, the positive predictive value (PPV), defined as the number of true positives divided by the sum of true positives and false positives, must be equal across groups within an acceptable margin of statistical error. As shown in the boxplot below, at a threshold of 32 percent predicted probability of being a high earner,[8] the PPV of the high-earner category (both mean and median) equals 60.6 percent for both groups.[9] In the boxplot, the median is represented by a horizontal bar within the box, and the box spans the range between the first and third quartiles.
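A minimal sketch of how these group-wise quantities could be computed from a model's predicted probabilities is shown below. It assumes NumPy, 0/1 labels with 1 denoting a high earner, and a hypothetical helper named `group_rates`; it is an illustration, not the code behind the results in this article. Besides the PPV, it returns the false positive and false negative rates that the error rate balance criterion compares.

```python
import numpy as np


def group_rates(y_true, y_score, group, threshold: float) -> dict:
    """Return PPV, FPR and FNR for each value of the sensitive attribute.

    y_true: 0/1 labels (1 = high earner); y_score: predicted probabilities;
    group: sensitive attribute per record; threshold: cut-off, e.g. 0.32.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_score) >= threshold  # True = predicted high earner
    group = np.asarray(group)

    rates = {}
    for g in np.unique(group):
        m = group == g
        tp = np.sum(y_pred[m] & (y_true[m] == 1))
        fp = np.sum(y_pred[m] & (y_true[m] == 0))
        fn = np.sum(~y_pred[m] & (y_true[m] == 1))
        tn = np.sum(~y_pred[m] & (y_true[m] == 0))
        # Each rate is NaN (with a NumPy warning) if its denominator is zero.
        rates[g] = {
            "ppv": tp / (tp + fp),  # share of predicted positives that are true
            "fpr": fp / (fp + tn),  # share of actual negatives flagged positive
            "fnr": fn / (fn + tp),  # share of actual positives that are missed
        }
    return rates
```

Predictive parity then amounts to the `"ppv"` entries agreeing across groups within sampling error, while error rate balance requires both the `"fpr"` and the `"fnr"` entries to agree.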

Turning to the concept of error rate balance, the algorithm satisfies this concept of fairness at a chosen threshold if the false positive rate (the share of actual low earners predicted to be high earners) and the false negative rate (the share of actual high earners predicted to be low earners) are each equal across groups. A direct consequence is that a scoring model satisfying predictive parity violates error rate balance if the baseline prevalence differs across groups. Thus, despite satisfying predictive parity, the algorithm has a disparate impact on the two groups.[10]
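The reasoning can be made explicit with an identity from Chouldechova (Endnote 7). For a group of size N with baseline prevalence p, the standard definitions FPR = FP/(FP + TN) and FNR = FN/(FN + TP) give the number of true positives directly, and the definition of PPV then fixes the number of false positives. Dividing by the number of actual negatives, (1 - p)N, yields:

```latex
\begin{aligned}
\mathrm{TP} &= (1-\mathrm{FNR})\,pN, \qquad
\mathrm{FP} = \mathrm{TP}\,\frac{1-\mathrm{PPV}}{\mathrm{PPV}},\\
\mathrm{FPR} &= \frac{\mathrm{FP}}{(1-p)N}
  = \frac{p}{1-p}\cdot\frac{1-\mathrm{PPV}}{\mathrm{PPV}}\cdot\left(1-\mathrm{FNR}\right).
\end{aligned}
```

Assuming 0 < PPV < 1, if PPV is the same for both groups but p differs, equal false negative rates force unequal false positive rates (the group with the lower prevalence gets the lower FPR), and equal false positive rates force unequal false negative rates. Both error rates therefore cannot be balanced at once.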

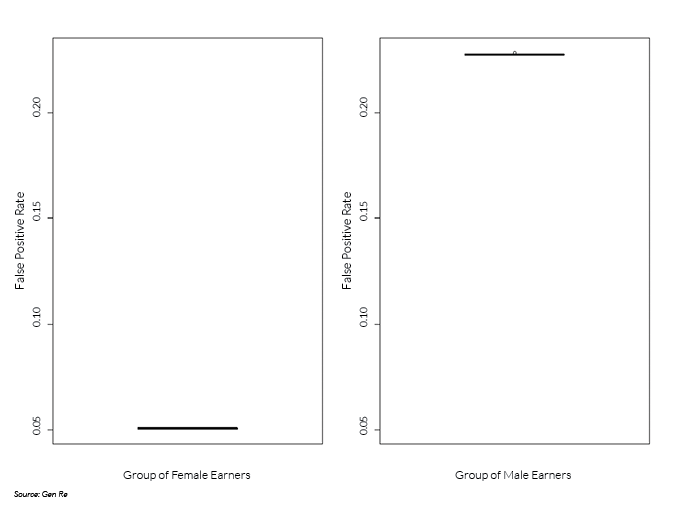

In the stylized example, females have a lower baseline rate of high income than males. Given predictive parity, females therefore experience a lower false positive rate (see boxplot below): the median false positive rate is 5.1 percent for females (left panel), compared with 22.8 percent for males (right panel). In each panel, the median is displayed as the horizontal bar within the box.

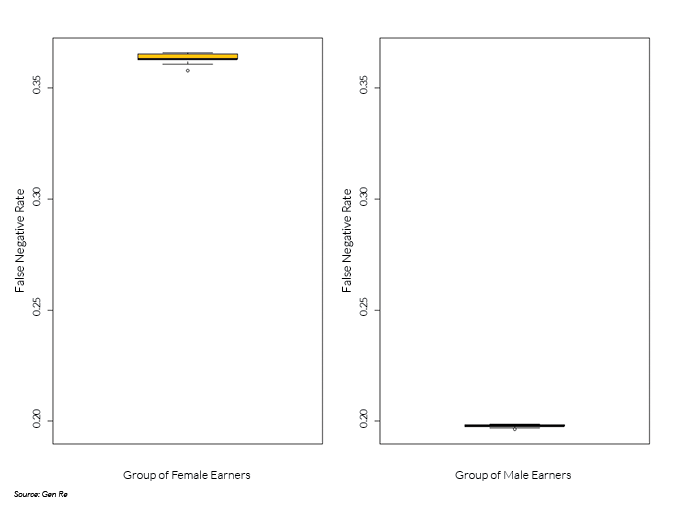

Correspondingly, males experience a lower false negative rate (see boxplot below): the median false negative rate is 19.8 percent for males (right panel), compared with 36.3 percent for females (left panel).
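As a rough consistency check, the reported figures can be fed into Chouldechova's identity (Endnote 7), `FPR = p / (1 - p) * (1 - PPV) / PPV * (1 - FNR)`, where p is a group's baseline prevalence. The sketch below uses a hypothetical function name and the rounded percentages quoted in this article (baseline rates from the full dataset, PPV and false negative rates as medians across runs), so only approximate agreement should be expected.

```python
def fpr_from_identity(prevalence: float, ppv: float, fnr: float) -> float:
    """False positive rate implied by FPR = p/(1-p) * (1-PPV)/PPV * (1-FNR).

    Hypothetical helper; assumes 0 < prevalence < 1 and 0 < ppv <= 1.
    """
    odds = prevalence / (1 - prevalence)  # baseline odds of being a high earner
    return odds * (1 - ppv) / ppv * (1 - fnr)


# Rounded figures quoted in the article (approximate inputs).
ppv = 0.606                                      # common PPV under predictive parity
fpr_female = fpr_from_identity(0.11, ppv, 0.363)
fpr_male = fpr_from_identity(0.30, ppv, 0.198)
print(f"female FPR ~ {fpr_female:.3f}")  # female FPR ~ 0.051
print(f"male FPR ~ {fpr_male:.3f}")      # male FPR ~ 0.223
```

The identity gives about 5.1 percent for females and 22.3 percent for males, close to the reported medians of 5.1 and 22.8 percent; the small gap for males plausibly reflects rounding of the inputs and the mixing of full-dataset baseline rates with medians across runs.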

When applying algorithms, it is important to recognize the trade-offs between different concepts of fairness, as well as the presence of disparate impacts. Fairness is ultimately a societal, not a statistical, concept.[11] Insurers have an opportunity to shape the discussion on algorithmic fairness by demonstrating awareness of the potential societal implications of their algorithmic decision-making.

Endnotes

1. Angwin, Julie, Jeff Larson, Surya Mattu, and Lauren Kirchner (2016). “Machine Bias”. ProPublica, May 23. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing.

2. Corbett-Davies, Sam, Emma Pierson, Avi Feller, and Sharad Goel (2016). “A computer program used for bail and sentencing decisions was labeled biased against blacks. It's actually not that clear.” Washington Post, October 17. https://www.washingtonpost.com/news/monkey-cage/wp/2016/10/17/can-an-algorithm-be-racist-our-analysis-is-more-cautious-than-propublicas/. See also a follow-up ProPublica article: Angwin, Julie, and Jeff Larson (2016). “Bias in Criminal Risk Scores Is Mathematically Inevitable, Researchers Say”. ProPublica, December 30. https://www.propublica.org/article/bias-in-criminal-risk-scores-is-mathematically-inevitable-researchers-say.

3. Corbett-Davies, Sam, and Sharad Goel (2018). “The Measure and Mismeasure of Fairness: A Critical Review of Fair Machine Learning”. Stanford Computational Policy Lab Working Paper, September 11. https://policylab.stanford.edu/projects/defining-and-designing-fair-algorithms.html.

4. For a detailed academic analysis, see Kleinberg, Jon, Sendhil Mullainathan, and Manish Raghavan (2017). “Inherent Trade-Offs in the Fair Determination of Risk Scores”. 8th Innovations in Theoretical Computer Science Conference (ITCS 2017), 43:1-43:23. https://drops.dagstuhl.de/opus/volltexte/2017/8156/pdf/LIPIcs-ITCS-2017-43.pdf.

5. The dataset and data dictionary are available in the UC Irvine Machine Learning Repository: https://archive.ics.uci.edu/ml/datasets/census+income.

6. A cross-validated logistic LASSO is employed. Of the 14 predictors, ‘fnlwgt’, ‘capital-gain’, and ‘capital-loss’ are discarded. The remaining 11 predictors yield 4,460 features after feature engineering.

7. Chouldechova, Alexandra (2017). “Fair prediction with disparate impact: A study of bias in recidivism prediction instruments”. Big Data 5:2, 153-163. DOI: 10.1089/big.2016.0047.

8. A decision-maker may choose a threshold that balances the marginal cost of a false positive against the marginal cost of a false negative.

9. The algorithm was run 50 times, and the boxplots display the dispersion of values across these 50 runs. Machine learning algorithms make use of random processes, for instance when splitting the observations into training and holdout datasets, and when splitting the training dataset into cross-validation folds. Averaging across multiple runs mitigates the influence of randomness on the results.

10. Ibid., Endnote 7.

11. Ibid., Endnote 7.